Effective Modern Python 2018

Contents

- Type Annotation

- Data Classes

- Packaging and Dependency Management

Type Annotation

Some benefits of type annotations

- Static code analysis prior to runtime

  - PyCharm (IntelliJ)

  - mypy

- Self-documenting

  - Code itself is a document

- Code completion

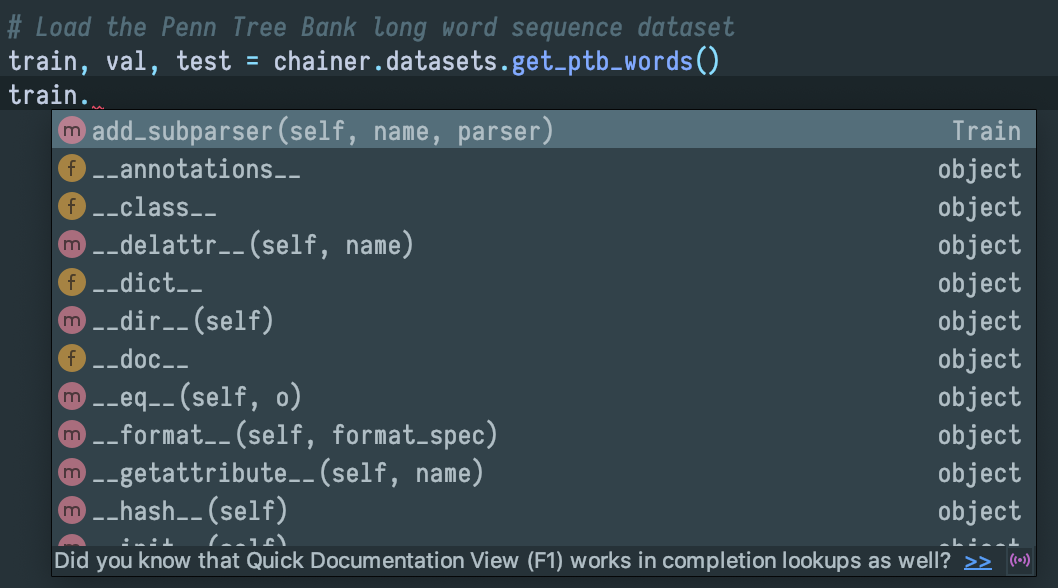
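As a minimal sketch of the first benefit, the snippet below shows the kind of mistake a static checker is meant to catch before runtime. `count_tokens` is a hypothetical function made up for illustration, and the commented-out call is one that a checker such as mypy would be expected to reject.

```python
from typing import List


def count_tokens(tokens: List[str]) -> int:
    """Return the number of tokens in a tokenized sentence."""
    return len(tokens)


n = count_tokens(["hello", "world"])
print(n)

# A static checker would be expected to flag the next call, because a str
# is passed where List[str] is declared. Python itself would run it without
# complaint and return the number of characters instead of tokens.
# count_tokens("hello world")
```

Without the annotation, the wrong call fails silently, which is exactly the class of bug that is cheaper to find before the program runs.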

Chainer RNNLM Example

train, val, test = chainer.datasets.get_ptb_words()

- Type of `train`, `val`, `test`?

- What kind of operations can be done on these variables?

🤔

Go to Declaration

def get_ptb_words():
    """Gets the Penn Tree Bank dataset as long word sequences.

    [...]

    Returns:
        tuple of numpy.ndarray: Int32 vectors of word IDs.

    """
    train = _retrieve_ptb_words('train.npz', _train_url)
    valid = _retrieve_ptb_words('valid.npz', _valid_url)
    test = _retrieve_ptb_words('test.npz', _test_url)
    return train, valid, test

- 😸 docstring

- 😿 IDEs can’t parse complicated types

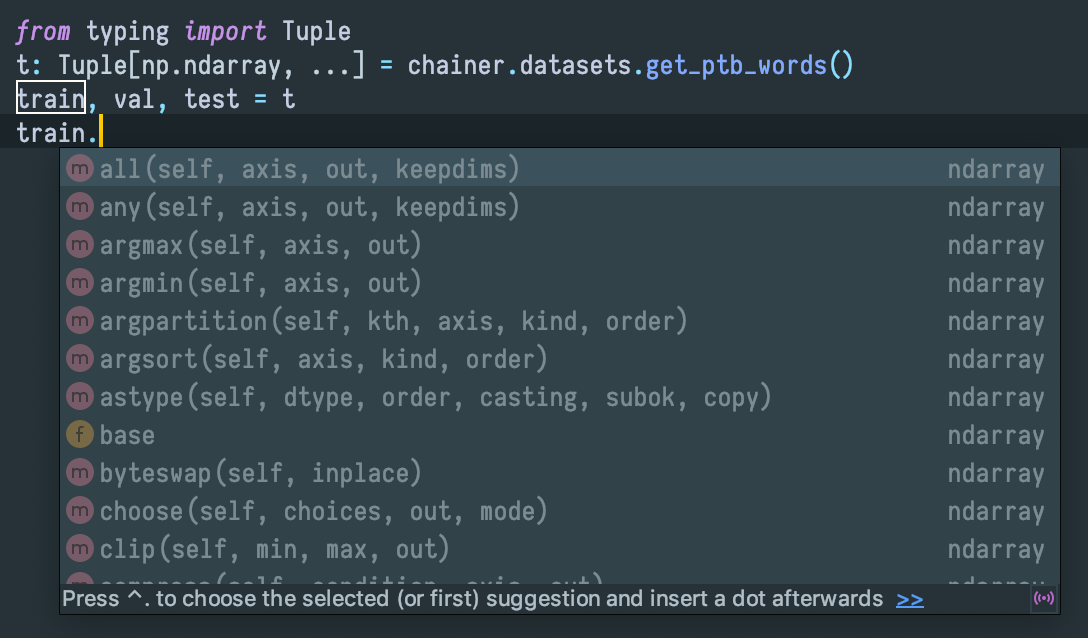

Add Type Annotations

from typing import Dict

def load_vocab(vocabfile: str) -> Dict[int, str]:
    [...]

# typed!
vocab = load_vocab(vocabfile)
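The slide elides the body of `load_vocab`, so the following is only a sketch of how an annotated loader might look. It assumes a hypothetical file format with one word per line, where the line index is the word ID; the real format is not given in the slides.

```python
import os
import tempfile
from typing import Dict


def load_vocab(vocabfile: str) -> Dict[int, str]:
    """Map word IDs to words, assuming one word per line (hypothetical format)."""
    with open(vocabfile, encoding="utf-8") as f:
        return {word_id: line.rstrip("\n") for word_id, line in enumerate(f)}


# Write a tiny throwaway vocabulary file so the sketch runs on its own.
with tempfile.TemporaryDirectory() as tmpdir:
    vocabfile = os.path.join(tmpdir, "vocab.txt")
    with open(vocabfile, "w", encoding="utf-8") as f:
        f.write("<unk>\nthe\ncat\n")
    vocab = load_vocab(vocabfile)

# Because vocab is declared as Dict[int, str], an IDE can offer str methods
# on the looked-up value.
print(vocab[1].upper())
```

The return annotation is what lets the caller's `vocab` be typed without any annotation at the call site.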

If you can’t modify the function declaration…

# function from another library
def load_vocab(vocabfile):
    [...]

from typing import Dict

# typed!
vocab: Dict[int, str] = load_vocab(vocabfile)

🤗
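A runnable sketch of the same idea, using `json.loads` from the standard library as a stand-in for "a function you cannot modify". Its result carries no useful static type, so the variable annotation is what gives tools something to work with. The JSON content here is made up for illustration.

```python
import json
from typing import Dict

# json.loads gives tools no specific type for its result, so a checker or
# IDE knows nothing about word_ids unless the variable itself is annotated.
raw = '{"the": 0, "cat": 1}'
word_ids: Dict[str, int] = json.loads(raw)

# The annotation is not checked at runtime; it only informs tools.
total = sum(word_ids.values())
print(total)
```

Note that the annotation is a promise made by the author of the code: if the data does not match, Python will not complain at the assignment.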

Data Classes

Easier Way to Write Classes 🤗

from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class Vocabulary:
    padding_token: str
    oov_token: str
    max_vocab_size: int
    pretrained_files: Optional[Dict[str, str]] = None

- Similar to Scala’s Case Classes
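A short sketch of what the decorator provides: `__init__`, `__repr__`, and `__eq__` are generated from the field declarations. The class is repeated here so the snippet runs on its own, and the token values are placeholders chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Vocabulary:
    padding_token: str
    oov_token: str
    max_vocab_size: int
    pretrained_files: Optional[Dict[str, str]] = None


# Positional and keyword construction both use the generated __init__.
a = Vocabulary("<pad>", "<unk>", 10000)
b = Vocabulary(padding_token="<pad>", oov_token="<unk>", max_vocab_size=10000)

print(a)       # uses the generated __repr__
print(a == b)  # uses the generated __eq__, which compares field by field
```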

Conventional Class 🤔

from typing import Dict, Optional

class Vocabulary:
    def __init__(
        self,
        padding_token: str,
        oov_token: str,
        max_vocab_size: int,
        pretrained_files: Optional[Dict[str, str]] = None,
    ):
        self.padding_token = padding_token
        self.oov_token = oov_token
        self.max_vocab_size = max_vocab_size
        self.pretrained_files = pretrained_files

    def __eq__(self, other):
        # Compare attributes only against another Vocabulary
        if isinstance(other, self.__class__):
            return self.__dict__ == other.__dict__
        # Let Python fall back to its default comparison for other types
        return NotImplemented

- Documentation (en / ja)

- The attrs library is also recommended

  - more features than Data Classes

  - moves faster

Packaging and Dependency Management

- How to reproduce your environment?

- How to make your program `pip install`able?

Other languages have great tools

- Scala: sbt

- JavaScript: npm, yarn

- Rust: cargo

- Go: dep

- Python: …🤔

Ancient Way 🤔

- Dump all installed packages and force users to install every one of them:

$ pip freeze > requirements.txt

$ pip install -r requirements.txt

- You have to manage virtual environments yourself

- Are there unnecessary dependencies?

- What about version compatibility between Python and the libraries?

- Metadata management?

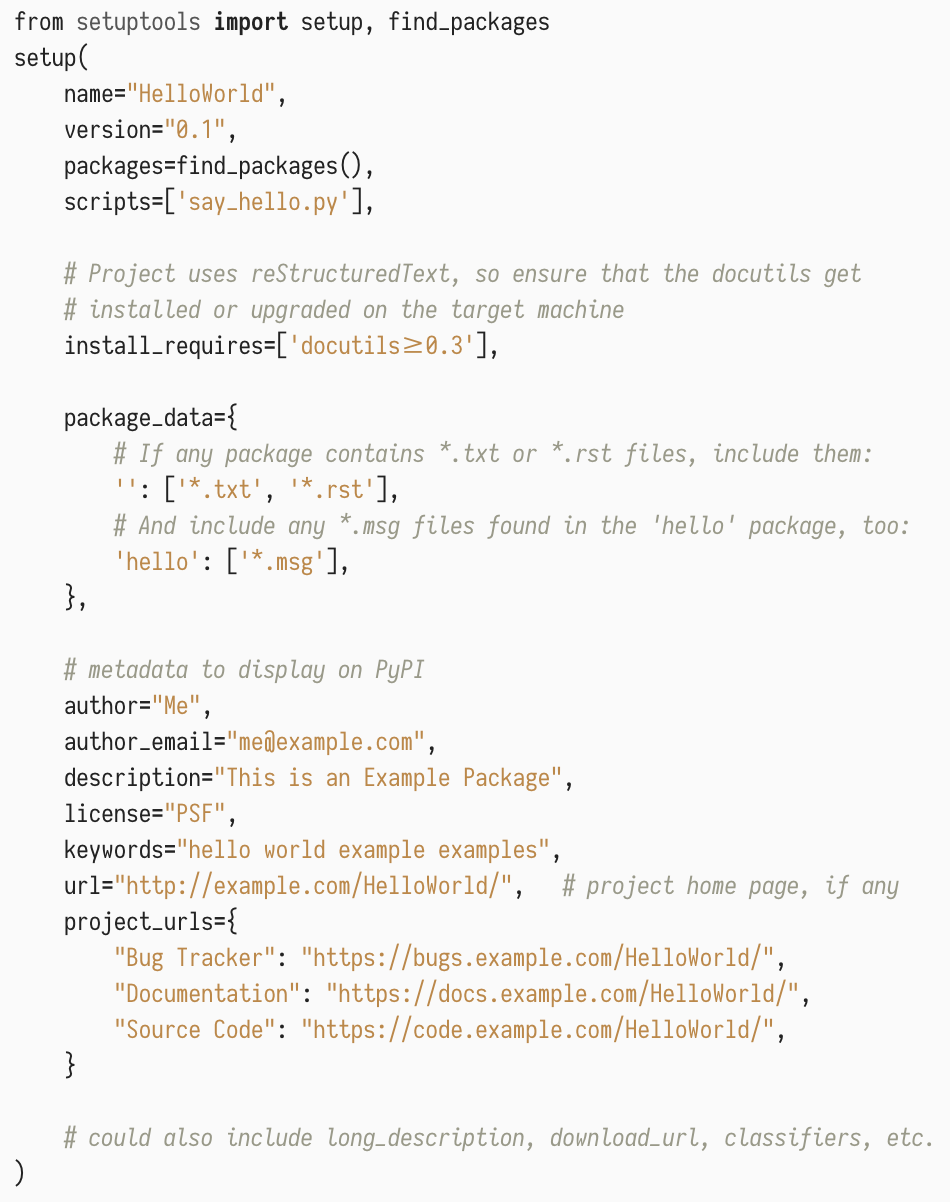
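To see why a freeze tends to pull in unnecessary dependencies, here is a rough sketch of what such a dump amounts to, written with `importlib.metadata` (Python 3.8+). It is not pip's actual implementation; `freeze` is a hypothetical helper that simply pins every distribution found in the current environment.

```python
from importlib import metadata
from typing import List


def freeze() -> List[str]:
    """List every installed distribution as a name==version pin."""
    pins = set()
    for dist in metadata.distributions():
        meta = dist.metadata
        name = meta.get("Name") if meta is not None else None
        if name:  # skip distributions with broken or missing metadata
            pins.add(f"{name}=={dist.version}")
    return sorted(pins, key=str.lower)


pins = freeze()
print(f"{len(pins)} packages pinned")
for pin in pins[:5]:
    print(pin)
```

Nothing in the output distinguishes the packages your program imports directly from transitive dependencies or tools that merely happen to be installed.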

Medieval Way 🤔

- Use standard Setuptools

  - Messy documentation

  - Need to write a messy `setup.py`

Modern Way 🤗

- Use Pipenv, Hatch, Flit, or Poetry

  - Work as another `pip`

  - Clean documentation

- I personally recommend Poetry

$ poetry init

$ poetry add chainer # equivalent to `pip install chainer`

$ poetry build # make an sdist and a wheel

$ poetry publish # upload to PyPI

# pip installable after this command 🤗

Further Reading

- Type Annotation

- Data Classes

- Packaging and Dependency Management

Effective Modern Python 2018

Ryo Takahashi